ℹ️ Available today with LaunchFast Starter Kits

In this guide, you will learn how to generate pre-signed URLs for Amazon S3 with Astro on Cloudflare Workers. You will set up a new Astro project, enable server-side rendering with the Cloudflare adapter, obtain AWS credentials, and then write functions that generate pre-signed URLs for retrieving objects from and uploading objects to Amazon S3.

Prerequisites

To follow along, you will need:

- Node.js 20 or later

- An AWS account

Table of Contents

- Create a new Astro application

- Integrate Cloudflare adapter in your Astro project

- Create access keys for IAM users

- Configure Amazon S3 Bucket Policy

- Configure Amazon S3 CORS

- Generate the pre-signed URLs

- Deploy to Cloudflare Workers

Create a new Astro application

Let’s get started by creating a new Astro project. Open your terminal and run the following command:

```bash
npm create astro@latest my-app
```

`npm create astro` is the recommended way to scaffold an Astro project quickly.

When prompted, choose:

- Use minimal (empty) template when prompted on how to start the new project.
- Yes when prompted to install dependencies.
- Yes when prompted to initialize a git repository.

Once that’s done, you can move into the project directory and start the app:

```bash
cd my-app
npm run dev
```

The app should be running on localhost:4321. Next, run the command below to install the library the application needs:

```bash
npm install aws4fetch
```

The following library is installed:

- aws4fetch: An AWS client for environments that support fetch and SubtleCrypto.
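aws4fetch needs SubtleCrypto because AWS Signature Version 4 (SigV4) is built on HMAC-SHA256. For intuition only, here is a minimal sketch of the SigV4 signing-key derivation using nothing but `crypto.subtle`, which is available globally in Node.js 20 and in Cloudflare Workers. The helper names (`hmacSha256`, `deriveSigningKey`, `toHex`) are hypothetical; aws4fetch performs this step internally, so the app in this guide does not need this code.

```typescript
// Sketch of the AWS Signature Version 4 signing-key derivation using only the
// Web Crypto API. hmacSha256, deriveSigningKey and toHex are hypothetical helpers,
// not part of aws4fetch's API (aws4fetch performs this step internally).
const encoder = new TextEncoder()

// HMAC-SHA256(key, data) via SubtleCrypto.
async function hmacSha256(key: BufferSource, data: string): Promise<ArrayBuffer> {
  const cryptoKey = await crypto.subtle.importKey(
    'raw',
    key,
    { name: 'HMAC', hash: 'SHA-256' },
    false,
    ['sign'],
  )
  return crypto.subtle.sign('HMAC', cryptoKey, encoder.encode(data))
}

// kSigning = HMAC(HMAC(HMAC(HMAC("AWS4" + secret, date), region), service), "aws4_request")
export async function deriveSigningKey(
  secretAccessKey: string,
  date: string, // YYYYMMDD, e.g. "20250401"
  region: string, // e.g. "us-east-1"
  service: string, // "s3" for Amazon S3
): Promise<ArrayBuffer> {
  const kDate = await hmacSha256(encoder.encode(`AWS4${secretAccessKey}`), date)
  const kRegion = await hmacSha256(kDate, region)
  const kService = await hmacSha256(kRegion, service)
  return hmacSha256(kService, 'aws4_request')
}

// Hex-encodes a buffer, e.g. for logging or comparison.
export function toHex(buffer: ArrayBuffer): string {
  return Array.from(new Uint8Array(buffer), (byte) => byte.toString(16).padStart(2, '0')).join('')
}
```

The resulting key is then used to HMAC the request's string-to-sign. Building the canonical request and that final signature is what aws4fetch's `sign` method takes care of for you.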

Integrate Cloudflare adapter in your Astro project

To generate pre-signed URLs for each object dynamically, you will enable server-side rendering in your Astro project via the Cloudflare adapter. Execute the following command:

```bash
npx astro add cloudflare
```

When prompted, choose the following:

- Y when prompted whether to install the Cloudflare dependencies.
- Y when prompted whether to make changes to the Astro configuration file.

You have successfully enabled server-side rendering in Astro.

To make sure that the output is deployable to Cloudflare Workers, create a wrangler.toml file in the root of the project with the following code:

```toml
name = "amazon-s3-astro-workers"
main = "dist/_worker.js"
compatibility_date = "2025-04-01"
compatibility_flags = [ "nodejs_compat" ]

[assets]
directory = "dist"
binding = "ASSETS"

[vars]
AWS_KEY_ID = ""
AWS_REGION_NAME = ""
AWS_S3_BUCKET_NAME = ""
AWS_SECRET_ACCESS_KEY = ""
```

After that, make sure the variables are defined in both an .env file and wrangler.toml, so that they can be accessed during npm run dev and when deployed on Cloudflare Workers, respectively.
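Because the same four variables have to be present in both places, a quick check can save you from a confusing runtime error. The sketch below uses a hypothetical helper, `findMissingAwsVars`, that takes any plain key/value record (for example, parsed .env values, or `process.env` in a Node.js script) and lists the required names that are missing or empty.

```typescript
// Hypothetical helper: reports which of the variables this guide relies on
// are missing or empty in a plain key/value record.
const REQUIRED_AWS_VARS = [
  'AWS_KEY_ID',
  'AWS_REGION_NAME',
  'AWS_S3_BUCKET_NAME',
  'AWS_SECRET_ACCESS_KEY',
] as const

export function findMissingAwsVars(env: Record<string, string | undefined>): string[] {
  // An empty or whitespace-only value (like the "" placeholders in wrangler.toml)
  // counts as missing.
  return REQUIRED_AWS_VARS.filter((name) => !env[name]?.trim())
}
```

The names are kept in the same order as the `[vars]` table, so the result reads top to bottom like the config file.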

Further, update the astro.config.mjs file with the following to be able to access these variables in code programmatically:

```js
// ...Existing imports...
import { defineConfig, envField } from 'astro/config'

export default defineConfig({
  env: {
    schema: {
      AWS_KEY_ID: envField.string({ context: 'server', access: 'secret', optional: false }),
      AWS_REGION_NAME: envField.string({ context: 'server', access: 'secret', optional: false }),
      AWS_S3_BUCKET_NAME: envField.string({ context: 'server', access: 'secret', optional: false }),
      AWS_SECRET_ACCESS_KEY: envField.string({ context: 'server', access: 'secret', optional: false }),
    },
  },
  // ...existing adapter configuration added by npx astro add cloudflare...
})
```

Create access keys for IAM users

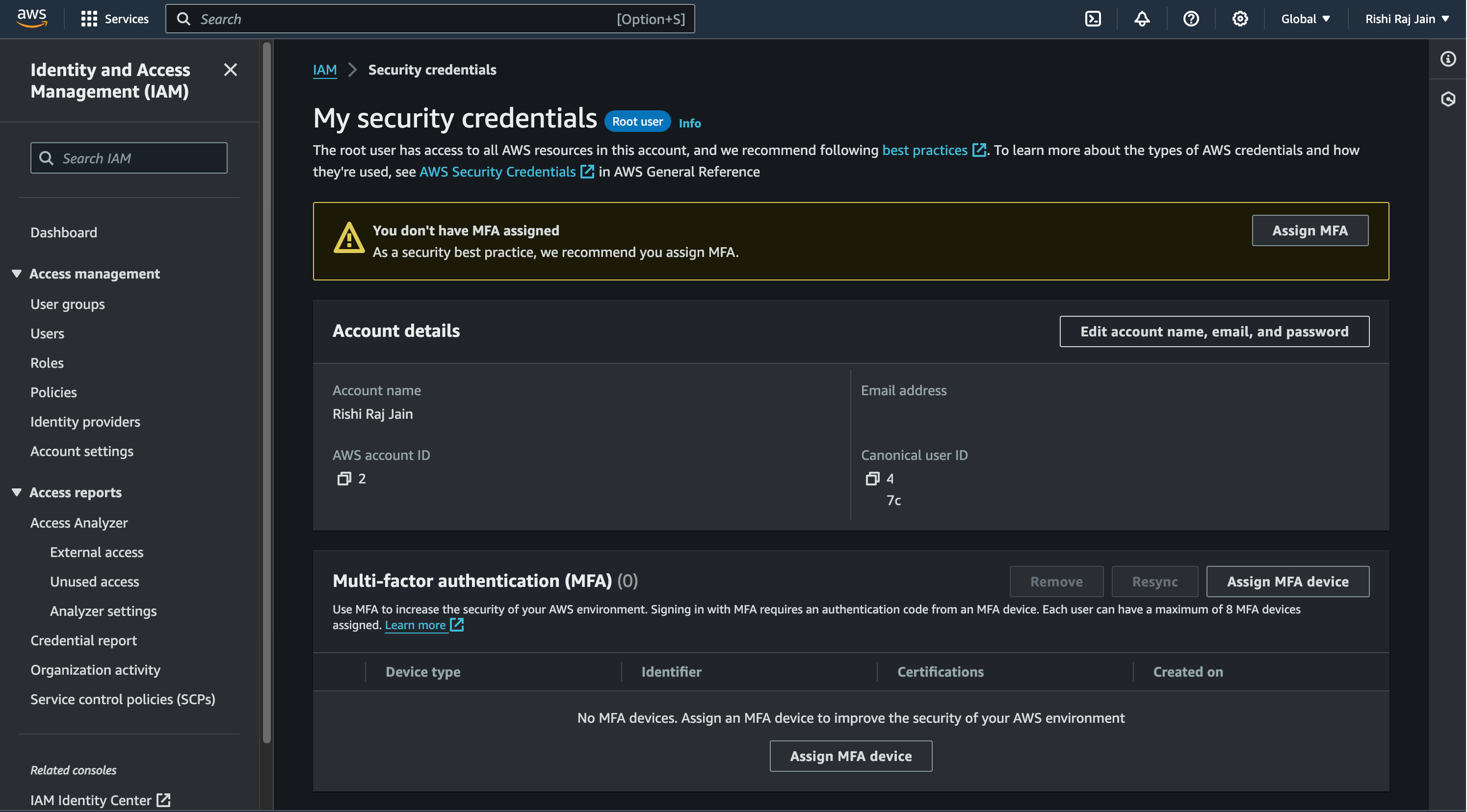

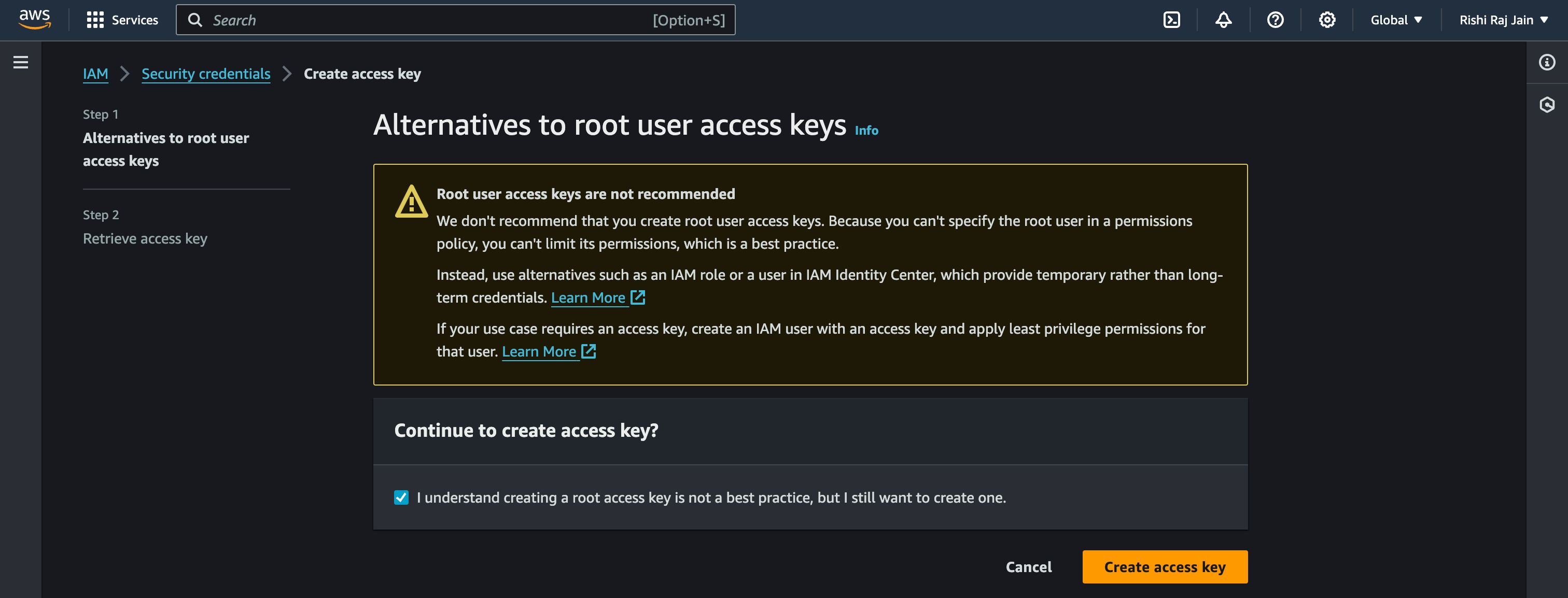

- In the navigation bar on the upper right in your AWS account, choose your user name, and then choose Security credentials.

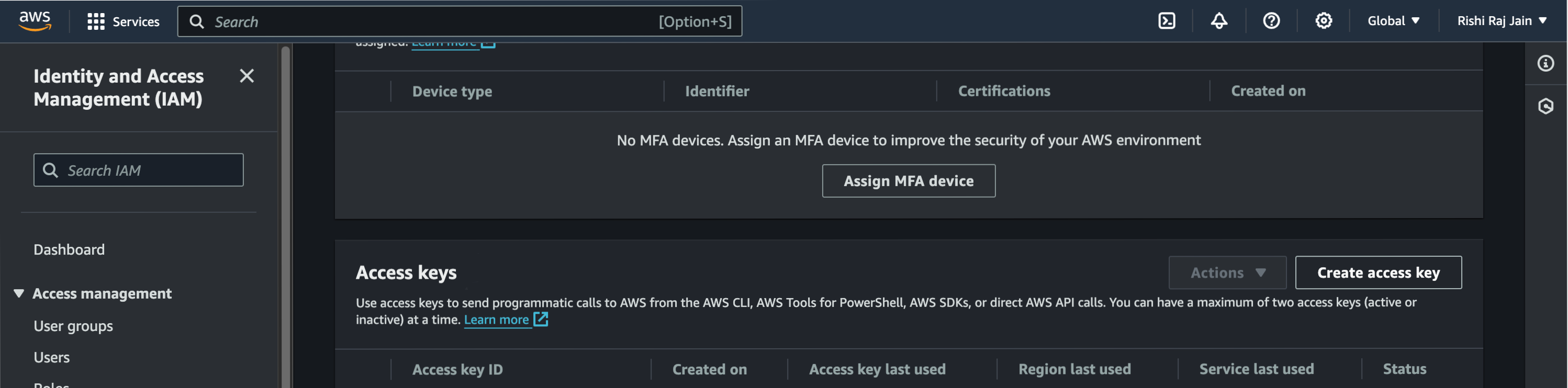

- Scroll down to Access keys and click on Create access key.

- Again, click on Create access key.

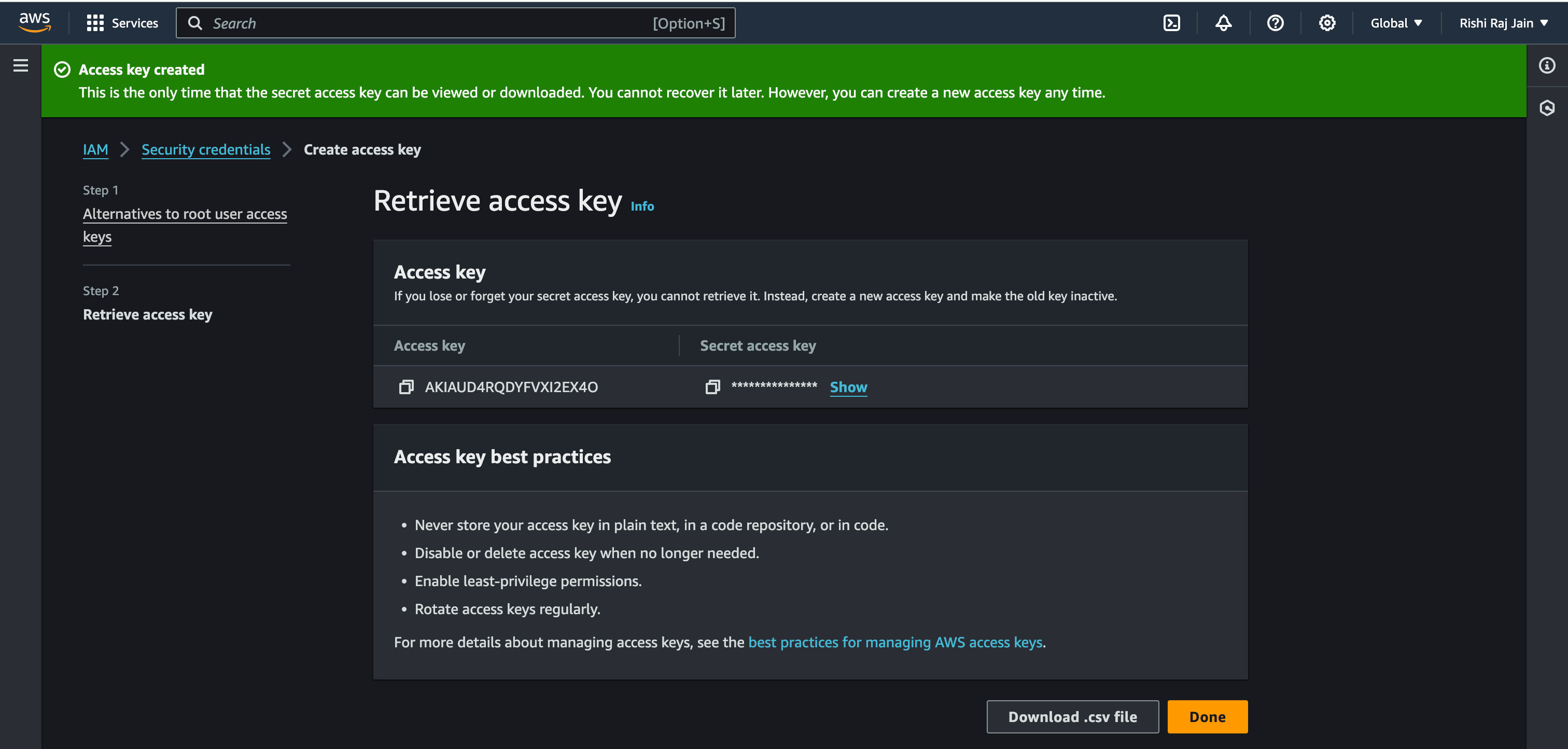

- Copy the generated Access key and Secret access key; they will be used as AWS_KEY_ID and AWS_SECRET_ACCESS_KEY respectively.

Configure Amazon S3 Bucket Policy

To securely share access to an Amazon S3 bucket, configure a bucket policy that explicitly defines who can access the bucket and which actions they are allowed to perform. In this case, the policy grants a specific AWS account permission to both upload (PutObject) and download (GetObject) objects in a particular S3 bucket. This is useful when you want another account (such as a partner, a separate environment, or a third-party integration) to interact with your bucket programmatically without making its contents public.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AuthenticatedReadUploadObjects",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<user-id>:root"
      },
      "Action": [
        "s3:PutObject",
        "s3:GetObject"
      ],
      "Resource": "arn:aws:s3:::<bucket-name>/*"
    }
  ]
}
```

In the configuration above, you restrict the GetObject and PutObject actions to the AWS account whose 12-digit account ID replaces <user-id> (the :root principal stands for that whole account) and to objects in the <bucket-name> bucket.
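If you manage several buckets or environments, generating the policy can be less error-prone than editing the placeholders by hand. A minimal sketch, assuming a hypothetical `buildBucketPolicy` helper that fills in the account ID and bucket name and rejects values that are not 12-digit AWS account IDs:

```typescript
// Hypothetical helper: builds the bucket policy shown above for a given
// AWS account ID and bucket name.
interface BucketPolicyStatement {
  Sid: string
  Effect: 'Allow' | 'Deny'
  Principal: { AWS: string }
  Action: string[]
  Resource: string
}

interface BucketPolicy {
  Version: '2012-10-17'
  Statement: BucketPolicyStatement[]
}

export function buildBucketPolicy(accountId: string, bucketName: string): BucketPolicy {
  // AWS account IDs are 12-digit numbers.
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error(`Expected a 12-digit AWS account ID, got "${accountId}"`)
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: 'AuthenticatedReadUploadObjects',
        Effect: 'Allow',
        // ":root" designates the whole account, not only its root user.
        Principal: { AWS: `arn:aws:iam::${accountId}:root` },
        Action: ['s3:PutObject', 's3:GetObject'],
        // "/*" applies the statement to every object in the bucket.
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  }
}
```

For example, `JSON.stringify(buildBucketPolicy('123456789012', 'my-bucket'), null, 2)` produces text you can paste into the bucket policy editor under the bucket's Permissions tab.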

Configure Amazon S3 CORS

Since the browser uploads files directly to Amazon S3 using pre-signed URLs, the bucket needs a CORS configuration that tells Amazon S3 to accept these cross-origin requests. The configuration below accepts GET and PUT requests from any origin.

NOTE: You can edit AllowedOrigins to limit requests to specific domains. Here, we are less concerned about the access level, since IAM authentication is already enforced when the pre-signed URLs are generated.
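If you do decide to restrict AllowedOrigins, a hypothetical helper such as `buildCorsRules` can produce the same rule with an explicit list of origins (for example, your production domain plus http://localhost:4321 for local development). It normalizes each entry with `new URL(origin).origin`, because browsers send the Origin header as scheme, host, and optional port with no path or trailing slash, so an entry like https://app.example.com/ may otherwise fail to match.

```typescript
// Hypothetical helper: builds an S3 CORS rule like the one below, but limited
// to an explicit list of origins instead of "*".
interface S3CorsRule {
  AllowedHeaders: string[]
  AllowedMethods: ('GET' | 'PUT' | 'POST' | 'DELETE' | 'HEAD')[]
  AllowedOrigins: string[]
  ExposeHeaders: string[]
  MaxAgeSeconds: number
}

export function buildCorsRules(origins: string[]): S3CorsRule[] {
  // Normalize to scheme://host[:port], the form browsers use in the Origin header.
  const allowedOrigins = origins.map((origin) => new URL(origin).origin)
  return [
    {
      AllowedHeaders: ['*'],
      AllowedMethods: ['GET', 'PUT'],
      AllowedOrigins: allowedOrigins,
      ExposeHeaders: [],
      MaxAgeSeconds: 9000,
    },
  ]
}
```

`JSON.stringify(buildCorsRules([...]), null, 2)` yields a document in the same shape as the configuration below.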

```json
[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "PUT"],
    "AllowedOrigins": ["*"],
    "ExposeHeaders": [],
    "MaxAgeSeconds": 9000
  }
]
```

Generate the pre-signed URLs

1. Access the Environment Variables

The first step is to read the environment variables needed at runtime to create an AWS client with aws4fetch. Starting with Astro 5.6, the recommended way to access runtime environment variables is the getSecret function from astro:env/server, which keeps your code provider-agnostic. Reading these values from the environment is crucial for handling sensitive information securely without hardcoding it into your application. You'll retrieve the following variables:

- AWS Access Key ID

- AWS Region Name

- Amazon S3 Bucket Name

- AWS Secret Access Key

```ts
import { getSecret } from 'astro:env/server'

const accessKeyId = getSecret('AWS_KEY_ID')
const s3RegionName = getSecret('AWS_REGION_NAME')
const s3BucketName = getSecret('AWS_S3_BUCKET_NAME')
const secretAccessKey = getSecret('AWS_SECRET_ACCESS_KEY')
```

2. Define the AWS Client

Next, you’ll define the defineAws4Fetch function that creates an AWS client instance using the required environment variables. This function checks if the required AWS credentials are set and returns a new AwsClient instance configured for S3.

```ts
import { AwsClient } from 'aws4fetch'

// ...Existing Code...

async function defineAws4Fetch(): Promise<AwsClient> {
  if (!accessKeyId || !secretAccessKey) {
    throw new Error(`AWS_KEY_ID OR AWS_SECRET_ACCESS_KEY environment variable(s) are not set.`)
  }
  return new AwsClient({
    service: 's3',
    accessKeyId,
    secretAccessKey,
    region: s3RegionName,
  })
}
```

3. Determine S3 URLs

Every object in Amazon S3 is addressed by its own URL, which you sign for both retrieval and upload. The getS3URL function below constructs that URL from the object key (the file name), the bucket name, and the region.
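The helper below reads the bucket and region from the environment. To see the URL shape in isolation, here is a hypothetical standalone variant, `buildS3ObjectUrl`, that takes the bucket and region as arguments and percent-encodes each `/`-separated segment of the key. With a plain `/${Key}` template, a key such as notes#draft.txt is parsed by `new URL` as the path /notes plus a #draft.txt fragment. Treat this as a sketch under that assumption: confirm that aws4fetch signs such pre-encoded paths the way S3 expects before swapping it in.

```typescript
// Hypothetical standalone variant of getS3URL: bucket and region are passed in
// instead of read from the environment, and each "/"-separated segment of the
// key is percent-encoded so characters such as "#" or "?" stay in the path.
export function buildS3ObjectUrl(bucket: string, region: string, key: string): URL {
  const encodedKey = key.split('/').map(encodeURIComponent).join('/')
  // Virtual-hosted-style endpoint: https://<bucket>.s3.<region>.amazonaws.com/<key>
  return new URL(`/${encodedKey}`, `https://${bucket}.s3.${region}.amazonaws.com`)
}
```

Slashes are kept as-is so that "folder" prefixes like photos/ still appear as path segments.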

```ts
// ...Existing Code...

function getS3URL({ Key }: { Key: string }) {
  if (!s3BucketName) {
    throw new Error(`AWS_S3_BUCKET_NAME environment variable(s) are not set.`)
  }
  // Virtual-hosted-style URL: https://<bucket>.s3.<region>.amazonaws.com/<key>
  return new URL(`/${Key}`, `https://${s3BucketName}.s3.${s3RegionName}.amazonaws.com`)
}
```

4. Pre-signed URL to GET an S3 Object (retrieve)

The getS3ObjectURL function below generates a pre-signed URL for retrieving an object from Amazon S3. The signature is computed locally by aws4fetch, without a request to S3, and the resulting URL lets anyone who holds it download that object until it expires.
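The function below sets X-Amz-Expires to 3600, so each URL stays valid for one hour from the moment it is signed. If a client needs to know when to request a fresh URL, a hypothetical helper such as `getPresignedUrlExpiry` can read that window back from the URL itself. It relies on the X-Amz-Date parameter, which SigV4 query signing (and therefore aws4fetch with `signQuery: true`) adds, on the X-Amz-Expires value set in the code, and on the 7-day (604800-second) upper limit SigV4 places on X-Amz-Expires.

```typescript
// Hypothetical helper: reads X-Amz-Date and X-Amz-Expires from a SigV4
// pre-signed URL and returns the moment the URL stops being valid.
const MAX_PRESIGN_SECONDS = 604800 // 7 days, the SigV4 query-signing maximum

export function getPresignedUrlExpiry(presignedUrl: string): Date {
  const params = new URL(presignedUrl).searchParams
  const amzDate = params.get('X-Amz-Date') // format: YYYYMMDDTHHMMSSZ (UTC)
  const expires = Number(params.get('X-Amz-Expires')) // a missing value becomes 0
  const match = amzDate?.match(/^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/)
  if (!match || !Number.isInteger(expires) || expires < 1 || expires > MAX_PRESIGN_SECONDS) {
    throw new Error('Not a valid SigV4 pre-signed URL')
  }
  const [, year, month, day, hour, minute, second] = match.map(Number)
  // Date.UTC months are zero-based.
  const signedAt = Date.UTC(year, month - 1, day, hour, minute, second)
  return new Date(signedAt + expires * 1000)
}
```

A client could compare the result with `Date.now()` and ask the API route for a new URL shortly before it expires.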

```ts
// ...Existing Code...

export async function getS3ObjectURL(Key: string) {
  try {
    const endpointUrl = getS3URL({ Key })
    // The signed URL stays valid for 3600 seconds (1 hour).
    endpointUrl.searchParams.set('X-Amz-Expires', '3600')
    const client = await defineAws4Fetch()
    // signQuery places the signature in the query string instead of in headers.
    const signedRequest = await client.sign(new Request(endpointUrl), { aws: { signQuery: true } })
    return signedRequest.url
  } catch (e: any) {
    const tmp = e.message || e.toString()
    console.log(tmp)
    // Re-throw so callers (such as the API route) can return an error response.
    throw e
  }
}
```

5. Pre-signed URL to PUT an S3 Object (upload)

The uploadS3ObjectURL function below generates a pre-signed URL for uploading a file to Amazon S3. It follows the same structure as the getS3ObjectURL function but signs a PUT request, so the resulting URL lets a client upload the file directly to S3 without holding AWS credentials.
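On the client, a pre-signed PUT URL is used with an ordinary fetch call, and the browser never sees your AWS credentials. A minimal sketch, assuming a hypothetical `uploadWithPresignedUrl` helper that receives the URL returned by uploadS3ObjectURL (through the API route) and a File or Blob; the `fetchImpl` parameter is an illustrative addition that makes the function easy to test.

```typescript
// Minimal browser-side sketch: upload a file with a pre-signed PUT URL.
// uploadWithPresignedUrl is a hypothetical helper; fetchImpl defaults to the
// global fetch and can be replaced with a stub in tests.
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>

export async function uploadWithPresignedUrl(
  uploadUrl: string,
  file: Blob,
  fetchImpl: FetchLike = fetch,
): Promise<void> {
  const response = await fetchImpl(uploadUrl, {
    method: 'PUT', // must match the method the URL was signed for
    // S3 stores this header as the object's Content-Type.
    headers: { 'Content-Type': file.type },
    body: file,
  })
  if (!response.ok) {
    // S3 describes failures (e.g. an expired signature) in an XML error body.
    throw new Error(`Upload failed with status ${response.status}: ${await response.text()}`)
  }
}
```

In a page script, the `file` argument would typically come from an `<input type="file">` element, and the request only succeeds if the bucket's CORS configuration allows PUT from your page's origin.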

```ts
// ...Existing Code...

export async function uploadS3ObjectURL(file: { name: string; type: string }) {
  try {
    // The file name is used as the object key.
    const Key = file.name
    const endpointUrl = getS3URL({ Key })
    endpointUrl.searchParams.set('X-Amz-Expires', '3600')
    const client = await defineAws4Fetch()
    const signedRequest = await client.sign(
      new Request(endpointUrl, { method: 'PUT', headers: { 'Content-Type': file.type } }),
      { method: 'PUT', aws: { signQuery: true } },
    )
    return signedRequest.url
  } catch (e: any) {
    const tmp = e.message || e.toString()
    console.log(tmp)
    // Re-throw so callers (such as the API route) can return an error response.
    throw e
  }
}
```

6. Create a Server Endpoint (an API Route) in Astro

The import path in the endpoint below assumes the functions above live in src/storage/s3.ts and the endpoint is a file under src/pages/api/.

```ts
import type { APIContext } from 'astro'
import { getS3ObjectURL, uploadS3ObjectURL } from '../../storage/s3'

// Render this endpoint on demand (needed when the project's output is 'static',
// Astro's default) so it can read query parameters at request time.
export const prerender = false

// GET: return a pre-signed URL for retrieving the object named by the 'file' query parameter.
export async function GET({ request }: APIContext) {
  // Extract the 'file' parameter from the request URL.
  const url = new URL(request.url)
  const file = url.searchParams.get('file')
  // Check if the 'file' parameter exists in the URL.
  if (file) {
    try {
      const filePublicURL = await getS3ObjectURL(file)
      // Return the pre-signed URL with a 200 status code.
      return new Response(filePublicURL)
    } catch (error: any) {
      // If an error occurs, log the error message and return a 500 status code.
      const message = error.message || error.toString()
      console.log(message)
      return new Response(message, { status: 500 })
    }
  }
  // If the 'file' parameter is missing, return a 400 status code.
  return new Response('Invalid Request.', { status: 400 })
}

// POST: return a pre-signed URL for uploading an object with the given 'name' and 'type'.
export async function POST({ request }: APIContext) {
  // Extract the 'type' and 'name' parameters from the request URL.
  const url = new URL(request.url)
  const type = url.searchParams.get('type')
  const name = url.searchParams.get('name')
  if (!type || !name) return new Response('Invalid Request.', { status: 400 })
  try {
    // Generate a pre-signed URL the client can PUT the file to.
    // After uploading, GET this endpoint with ?file=<name> to obtain a retrieval URL.
    const publicUploadUrl = await uploadS3ObjectURL({ type, name })
    return new Response(publicUploadUrl)
  } catch (error: any) {
    // If generating the URL failed, return a 500 response with the error message.
    const message = error.message || error.toString()
    console.log(message)
    return new Response(message, { status: 500 })
  }
}
```

Deploy to Cloudflare Workers

To make your application deployable to Cloudflare Workers, create a file named .assetsignore in the public directory with the following content:

```text
_routes.json
_worker.js
```

Next, use the Wrangler CLI to deploy your application to Cloudflare Workers. Run the following command:

```bash
npm run build && npx wrangler@latest deploy
```

Conclusion

In this blog post, you learned how to integrate Amazon S3 with Astro and Cloudflare Workers for file uploads and retrieval. By following the implementation steps, you can securely upload and retrieve files from Amazon S3, ensuring that your web application has a robust and flexible storage solution.
